How to Build a Winning AI Platform for 2026

At A-CX, we have implemented several winning AI platforms, both in-house and for our clients. In the early days of generative AI, we encouraged people to jump from slides to action with Approachable AI.

Since then, both customer expectations and AI technologies have evolved significantly. The recipe for building a winning AI platform in 2026 is simple: go big or go home! This blog post summarizes why and how to do it. For the why, we draw on industry studies; the how is based on our customer stories. Read on to learn more!

What High-Performing Enterprise AI Organizations Do Differently

McKinsey identifies a group of AI high performers: respondents to its study who attribute 5% or more of their earnings before interest and taxes (EBIT) to the use of AI and report “significant value” from it. What characterizes them?

- They set growth or innovation objectives in addition to cost efficiency.

- They are 2.8x as likely as other organizations to fundamentally redesign their workflows with AI.

- They are 3.6x as likely as other organizations to use AI for enterprise-wide transformative change.

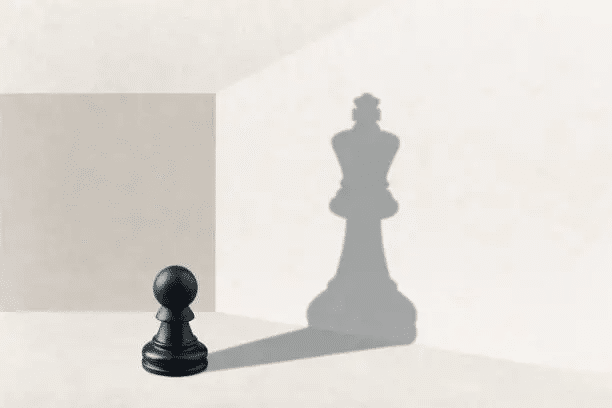

Remember that high ambition and a willingness to drive meaningful change are key to success!

What Hinders Enterprise AI Growth?

According to McKinsey, 88% of organizations report using AI in their business, yet about two-thirds have not yet begun scaling it across the enterprise. So why are so many of us still in the experimentation phase? It’s been three years since ChatGPT broke through, and Gartner estimates global AI spending at $1.5 trillion this year. If we have collectively invested the time, money, and effort, what’s lacking?

Common Enterprise AI Implementation Concerns from Our Customers

We have worked on multiple AI projects with our clients and have naturally approached the challenge from a customer experience perspective. Every case is different, but here are some common themes our customers have raised as concerns.

Build an AI Portfolio, Not Just One-Off Demos

Building a stand-alone demo or minimum viable product (MVP) means taking shortcuts. Building a collection of stand-alone products means duplicating effort. If you set your ambition higher (think enterprise-wide transformative change, as mentioned above), you start to find economies of scale.

Aim High to Compete with AI High Performers

An MIT study reported that roughly 95% of enterprise generative AI initiatives showed no measurable profit and loss (P&L) impact. Unfortunately, you’ll be competing against the successful 5%, so doing nothing is not a winning strategy. Aim to be an AI high performer!

Don’t Expect Human Behavior from AI Agents

If it looks like a human and behaves like a human, it must be a human. Wrong. At first glance, an AI system can seem to meet all expectations. It’s the corner cases that expose its shortcomings and the limited quality assurance it has been through. A human is guided by decades of education and experience. We apply contextual understanding by default, respecting budget limits and the boundaries of our authority. Even when we’re not explicitly told, we assume that not all information can be shared with everyone, whether because of internal policy, best practice, or non-disclosure agreements (NDAs) and contracts. An AI agent brings none of this context by default.

Redesign Ownership for Human and Agentic Resources

Replacing human capacity with AI agent capacity changes the failure profile. When a person leaves, managers redistribute the work, HR starts a backfill process, and the budget flows to a replacement. That muscle is well practiced. Agents have no HR function. When an agent breaks, you need a budget, a partner or in-house team to fix it, and a clear ownership path.

One agent can represent the work of several people, so an outage can overwhelm the remaining staff and systems. We must plan for this upfront, including ownership, runbooks, and a path to fund repairs, not just new features. Similar challenges apply when onboarding AI agents or preparing them for organizational or process changes. Instead of Human Resources, we should start thinking about Human and Agentic Resources.

Coordinate AI Across Teams to Avoid Siloed Apps

Imagine having several stand-alone AI apps that rely on the same underlying API or connector. One day, that API will be sunset, or the connector will stop working. If each AI app is implemented in isolation, you’re in a whole lot of trouble. You’d have to investigate several apps, each claiming priority, and find someone to fix each of them. Not only have you wasted development resources by duplicating effort; you may also have disrupted several teams’ work with no backup plan.
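One low-tech safeguard is a shared inventory of which AI apps depend on which APIs and connectors. The Python sketch below is illustrative only: the app names, dependency names, and the `affected_apps` helper are hypothetical, and in practice the inventory would live in a catalog or configuration database rather than in code. A reverse index answers “what breaks if this API is sunset?” in a single lookup.

```python
from collections import defaultdict

# Hypothetical inventory: the shared APIs and connectors each AI app depends on.
APP_DEPENDENCIES = {
    "candidate-screening": {"llm-api-v1", "hr-connector"},
    "sales-assistant": {"llm-api-v1", "crm-connector"},
    "policy-chatbot": {"llm-api-v2", "sharepoint-connector"},
}


def build_reverse_index(app_deps):
    """Map each dependency to the set of apps that use it."""
    index = defaultdict(set)
    for app, deps in app_deps.items():
        for dep in deps:
            index[dep].add(app)
    return index


def affected_apps(app_deps, retired_dependency):
    """Return, sorted by name, the apps that break when a shared dependency is retired."""
    index = build_reverse_index(app_deps)
    return sorted(index.get(retired_dependency, set()))


print(affected_apps(APP_DEPENDENCIES, "llm-api-v1"))
# → ['candidate-screening', 'sales-assistant']
```

The same index tells you which teams to warn before a sunset date, instead of discovering the breakage app by app.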

Build Agility into Your Vendor Strategy

AI models and the surrounding tech stack evolve at unprecedented speed. Today’s market leader can feel outdated tomorrow. We’re in a rare phase where best-in-class business services are often priced like consumer tools, but that land-grab pricing won’t last. As investors and boards shift their focus from growth at all costs to return on investment, pricing and terms will change, just as ride-share prices rose once the services had undercut traditional taxis. Vendors can end-of-life products, run into security or compliance issues, or tighten availability with little notice. A winning AI platform assumes this volatility. It’s designed to shorten the cycle time for swapping services or vendors, limit vendor lock-in, and reduce wasted effort when a bet on a specific model or provider no longer makes sense.

What Are AI Agents in the Enterprise?

One key term to define at this point is AI agent. Allow us one more reference to the McKinsey study: it found that 62% of organizations are at least experimenting with AI agents. Somewhat surprisingly, of all the companies out there, IBM gives my favorite definition: “An AI agent is a system that autonomously performs tasks by designing workflows with available tools.”

An AI agent works toward a goal by looping through observe → reason → decide → act → observe. Effective agentic execution is characterized by:

- Autonomy with guardrails. Moves work forward without handholding while staying within permissions, budgets, and approvals you set.

- Dynamic workflows. Not a fixed script; selects and sequences steps on the fly, such as search, retrieve, draft, call an API, validate, and escalate.

- Your tools. Uses the same systems your people use: docs, tickets, customer relationship management (CRM), human resources information system (HRIS), email, internal APIs.
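To make the loop and its guardrails concrete, here is a minimal Python sketch. It is not IBM’s definition in code or any agent framework’s API; the `Guardrails` fields, the `observe`/`decide` callables, and the escalation messages are assumptions chosen for illustration. The `decide` callable stands in for the model’s reasoning step, and the loop stops and escalates to a human whenever the next action would exceed permissions, budget, or a step limit.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable, Dict, Optional


@dataclass
class Guardrails:
    allowed_tools: set[str]   # permissions: which tools the agent may call
    budget: float             # spending cap for this run (e.g., API cost)
    max_steps: int = 10       # hard stop against runaway loops


@dataclass
class AgentRun:
    goal_reached: bool = False
    spent: float = 0.0
    actions: list[str] = field(default_factory=list)
    escalation: Optional[str] = None  # reason the run was handed to a human


def run_agent(
    observe: Callable[[], dict],
    decide: Callable[[dict], str],
    tools: Dict[str, Callable[[], None]],
    costs: Dict[str, float],
    guardrails: Guardrails,
) -> AgentRun:
    """Loop observe -> reason/decide -> act until done or a guardrail trips."""
    run = AgentRun()
    for _ in range(guardrails.max_steps):
        observation = observe()
        action = decide(observation)  # hypothetical stand-in for the LLM's reasoning
        if action == "done":
            run.goal_reached = True
            return run
        if action not in guardrails.allowed_tools:
            run.escalation = f"tool '{action}' not permitted"
            return run
        if run.spent + costs[action] > guardrails.budget:
            run.escalation = "budget exceeded"
            return run
        tools[action]()  # act; the next iteration observes the result
        run.spent += costs[action]
        run.actions.append(action)
    run.escalation = "step limit reached"
    return run
```

With a hypothetical `decide` that returns "search", then "draft", then "done", the run completes within budget; shrink the budget or remove "draft" from `allowed_tools`, and the run escalates instead of improvising.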

How does this differ from “just code”? Traditional automation runs a predefined path; agents choose their path at runtime. That flexibility is what dazzles in demos, but it also produces edge-case surprises in production. We want natural inputs but still expect hardened outputs. Agents, however, have neither the decades of institutional memory that people have nor the single, rigid rulebook that code follows. Think of agents as talented interns: often brilliant, sometimes surprising. The job is to harness that initiative safely.

Characteristics of a Winning AI Platform

As the next step, we define the characteristics of a winning AI platform. How do we define winning? “A winning AI platform is a reusable, modular foundation that transitions AI initiatives from experimental prototypes to governed, production-grade assets.” The resulting environment enables reliable innovation, maintains service continuity, and keeps AI investments adaptable, secure, and cost-efficient. Its business benefits are:

Swap AI Components Without Breaking the Platform

The platform supports replacing or upgrading core AI components, such as large language model (LLM) engines, software development kits (SDKs), or vendor APIs, without disrupting dependent systems. It allows migration between public and private AI models through configuration settings. All components are versioned to ensure a controlled rollout of new capabilities.
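A common way to get this swap-by-configuration behavior is an adapter layer: every app codes against one small interface, and a configuration entry decides which concrete model sits behind it. The Python sketch below assumes hypothetical adapter classes (`HostedModel`, `PrivateModel`) that return placeholder strings instead of calling a real vendor SDK; the config keys and model names are also illustrative.

```python
from __future__ import annotations

from typing import Dict, Protocol, Type


class ChatModel(Protocol):
    """The only interface dependent apps are allowed to use."""

    def complete(self, prompt: str) -> str: ...


class HostedModel:
    """Hypothetical adapter for a public, vendor-hosted model API."""

    def __init__(self, model_name: str):
        self.model_name = model_name

    def complete(self, prompt: str) -> str:
        # A real adapter would call the vendor's SDK here.
        return f"[{self.model_name}] {prompt}"


class PrivateModel:
    """Hypothetical adapter for a privately hosted model endpoint."""

    def __init__(self, endpoint: str):
        self.endpoint = endpoint

    def complete(self, prompt: str) -> str:
        # A real adapter would call the private endpoint here.
        return f"[private@{self.endpoint}] {prompt}"


ADAPTERS: Dict[str, Type] = {"hosted": HostedModel, "private": PrivateModel}


def load_model(config: dict) -> ChatModel:
    """Build whichever model the versioned config names; apps never import a vendor SDK."""
    adapter = ADAPTERS[config["provider"]]
    return adapter(**config["settings"])


# Moving from a public to a private model is a config change, not a code change.
config_v1 = {"version": 1, "provider": "hosted", "settings": {"model_name": "model-a"}}
config_v2 = {"version": 2, "provider": "private", "settings": {"endpoint": "llm.internal"}}
print(load_model(config_v1).complete("Summarize this resume."))
print(load_model(config_v2).complete("Summarize this resume."))
```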

Design for Resilient Day-to-Day AI Operations

The platform standardizes integration points and shared tools to simplify maintenance. It provides centralized configuration control and can automatically roll back changes if issues occur. Shared services like API gateways, AI tools, and prompt libraries include clear rules for monitoring, logging, and error handling.
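Automatic rollback can be as simple as keeping every configuration version and reverting when a post-change health check fails. This Python sketch is an in-memory illustration under that assumption; the `ConfigStore` class and the health-check callables are hypothetical, and a production platform would persist versions and run real monitoring probes.

```python
from __future__ import annotations

import copy
from typing import Callable, List


class ConfigStore:
    """Hypothetical central config store that versions every change."""

    def __init__(self, initial: dict):
        self._versions: List[dict] = [copy.deepcopy(initial)]

    @property
    def current(self) -> dict:
        return copy.deepcopy(self._versions[-1])

    @property
    def version(self) -> int:
        return len(self._versions) - 1

    def apply(self, change: dict, health_check: Callable[[dict], bool]) -> bool:
        """Publish a new version; roll back automatically if the health check fails."""
        candidate = copy.deepcopy(self._versions[-1])
        candidate.update(copy.deepcopy(change))
        self._versions.append(candidate)
        try:
            healthy = bool(health_check(copy.deepcopy(candidate)))
        except Exception:
            healthy = False  # treat a crashing check as a failed check
        if not healthy:
            self._versions.pop()  # revert to the last known-good version
        return healthy


store = ConfigStore({"model": "model-a", "temperature": 0.2})
store.apply({"model": "model-b"}, health_check=lambda cfg: True)
store.apply({"temperature": 5.0}, health_check=lambda cfg: cfg["temperature"] <= 1.0)
print(store.version, store.current)
# → 1 {'model': 'model-b', 'temperature': 0.2}
```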

Keep Every AI Experience On-Brand and Compliant

The platform enforces unified governance so all AI interactions and generated content follow company principles of fairness, reliability, safety, privacy, and accountability. Centralized configuration defines branding, tone, and visual identity for internal and white-label implementations. Every use case inherits guardrails and ethical guidance aligned with Microsoft AI principles and data-protection regulations.
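One way to make every use case inherit guardrails is to merge its settings onto a central policy while refusing overrides of locked keys. In this Python sketch, the policy keys, the locked set, and the brand names (Contoso and Fabrikam, Microsoft’s usual fictional companies) are all hypothetical; the point is the merge rule, not the specific settings.

```python
from __future__ import annotations

# Hypothetical central policy that every AI use case inherits.
CENTRAL_POLICY = {
    "brand_name": "Contoso",
    "tone": "friendly, professional",
    "pii_redaction": True,
    "human_review_required": True,
}

# Governance keys no use case may override; branding keys stay tunable (e.g., white label).
LOCKED_KEYS = frozenset({"pii_redaction", "human_review_required"})


def effective_policy(use_case_overrides: dict) -> dict:
    """Return central policy plus allowed overrides; reject attempts to change locked guardrails."""
    blocked = sorted(LOCKED_KEYS.intersection(use_case_overrides))
    if blocked:
        raise ValueError(f"locked guardrails cannot be overridden: {blocked}")
    return {**CENTRAL_POLICY, **use_case_overrides}


white_label = effective_policy({"brand_name": "Fabrikam"})
print(white_label["brand_name"], white_label["pii_redaction"])
# → Fabrikam True
```

A white-label deployment changes the brand, but privacy and review guardrails come along unchanged.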

Practical AI Challenges and How to Fix Them

It may be easier to understand the benefits of the platform through practical, real-life examples. The challenges and solutions below are based on multiple customer projects. To set the context, consider one of our early AI implementations. We aimed to streamline job application processing so that recruiters could spend more of their manual review time on top candidates and less on poor matches. We built an agent that accesses the HR system’s candidate pool and compares each application and resume with the job description. For each candidate, the system produced a star rating, a recommendation on whether to proceed, an executive summary, and a list of pros and cons. In effect, it turned unstructured applications into uniform, actionable information.
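Output like this is only actionable if it is uniform, so it helps to validate each reply before it reaches a recruiter. The Python sketch below assumes the agent is prompted to answer in JSON; the field names and the 1 to 5 star scale are our hypothetical choices, not the actual project’s schema. Malformed replies raise an error so they can be retried or routed to a person instead of silently entering the pipeline.

```python
from __future__ import annotations

import json
from dataclasses import dataclass
from typing import List

REQUIRED_FIELDS = {"candidate_id", "stars", "proceed", "summary", "pros", "cons"}


@dataclass
class ScreeningResult:
    candidate_id: str
    stars: int         # 1-5 star rating
    proceed: bool      # recommendation whether to proceed
    summary: str       # executive summary
    pros: List[str]
    cons: List[str]


def parse_screening(raw: str) -> ScreeningResult:
    """Turn the model's JSON reply into a validated result, or raise ValueError."""
    data = json.loads(raw)  # json.JSONDecodeError is a subclass of ValueError
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    missing = REQUIRED_FIELDS.difference(data)
    if missing:
        raise ValueError(f"missing fields: {sorted(missing)}")
    stars = data["stars"]
    if isinstance(stars, bool) or not isinstance(stars, int) or not 1 <= stars <= 5:
        raise ValueError("stars must be an integer from 1 to 5")
    if not isinstance(data["proceed"], bool):
        raise ValueError("proceed must be true or false")
    if not isinstance(data["summary"], str) or not data["summary"].strip():
        raise ValueError("summary must be a non-empty string")
    for key in ("pros", "cons"):
        items = data[key]
        if not isinstance(items, list) or not all(isinstance(i, str) for i in items):
            raise ValueError(f"{key} must be a list of strings")
    return ScreeningResult(
        candidate_id=str(data["candidate_id"]),
        stars=stars,
        proceed=data["proceed"],
        summary=data["summary"].strip(),
        pros=data["pros"],
        cons=data["cons"],
    )
```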

Fix Broken APIs and Expired Credentials with an API Gateway

The API of the LLM we had chosen to power the system abruptly reached end of life (EOL). Alarmingly, this happened not once but twice in a single year. The shelf life of LLMs is significantly shorter than that of traditional technologies, and their EOL often arrives unpredictably and with little notice.

A related problem is the use of time-limited credentials to connect to business applications such as an HR system. Time-limited credentials do serve a purpose, but someone needs to manage them.
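Managing time-limited credentials mostly means renewing them before they expire, in one place. The Python sketch below shows that pattern with a hypothetical `fetch` callable standing in for whatever token endpoint your HR system or identity provider exposes; the five-minute refresh margin is an arbitrary assumption, and the injectable clock is there only to make the behavior testable.

```python
from __future__ import annotations

import time
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Credential:
    token: str
    expires_at: float  # Unix timestamp, in seconds


class CredentialCache:
    """Hypothetical helper that renews a time-limited credential before it expires."""

    def __init__(
        self,
        fetch: Callable[[], Credential],
        refresh_margin_s: float = 300.0,
        clock: Callable[[], float] = time.time,
    ):
        self._fetch = fetch                # calls the (assumed) token endpoint
        self._margin = refresh_margin_s    # renew this many seconds before expiry
        self._clock = clock
        self._cred: Optional[Credential] = None

    def token(self) -> str:
        """Return a valid token, fetching a new one if none exists or expiry is near."""
        now = self._clock()
        if self._cred is None or now >= self._cred.expires_at - self._margin:
            self._cred = self._fetch()
        return self._cred.token
```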

Imagine the business impact if no capable maintenance team were on hand when the API breaks or credentials expire. The whole recruitment process would halt or slow down. Now imagine that this is a common API used by multiple systems, or that credentials are scattered across those systems.

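How to Make it Work? API Gateway. We recommend a central point that manages external APIs and acts as a secure gateway to internal AI models. When a model reaches EOL or a credential expires, the fix is made once in the gateway rather than in every app that depends on it. The gateway supports inbound calls via webhooks and serves as the foundation for future Model Context Protocol (MCP) servers.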
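At its core, such a gateway lets apps call a stable alias while the platform team decides which concrete model answers. The Python sketch below is a hypothetical, in-process illustration: real gateways add authentication, quotas, and logging, and the model IDs and alias are made up. Retiring a model, or falling back when one is unreachable, becomes a change in one place instead of a fix in every app.

```python
from __future__ import annotations

from typing import Callable, Dict, List, Set


class AIGateway:
    """Hypothetical gateway that routes stable aliases to concrete model backends."""

    def __init__(self) -> None:
        self._backends: Dict[str, Callable[[str], str]] = {}
        self._routes: Dict[str, List[str]] = {}  # alias -> model IDs in priority order
        self._retired: Set[str] = set()

    def register_backend(self, model_id: str, call: Callable[[str], str]) -> None:
        self._backends[model_id] = call

    def set_route(self, alias: str, model_ids: List[str]) -> None:
        self._routes[alias] = list(model_ids)

    def retire(self, model_id: str) -> None:
        """Mark a model as end-of-life; routes skip it from now on."""
        self._retired.add(model_id)

    def complete(self, alias: str, prompt: str) -> str:
        for model_id in self._routes[alias]:
            if model_id in self._retired:
                continue
            try:
                return self._backends[model_id](prompt)
            except ConnectionError:
                continue  # backend unreachable: try the next model in the route
        raise RuntimeError(f"no available model for alias '{alias}'")


gateway = AIGateway()
gateway.register_backend("model-2024", lambda p: f"old model: {p}")
gateway.register_backend("model-2025", lambda p: f"new model: {p}")
gateway.set_route("screening-llm", ["model-2024", "model-2025"])
print(gateway.complete("screening-llm", "Rate this resume."))
gateway.retire("model-2024")  # EOL handled once, centrally
print(gateway.complete("screening-llm", "Rate this resume."))
```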

Fix Inconsistent AI UX with Shared UI Libraries

Before the agentic era, brand teams were the gatekeepers for launching new deliverables, and user experience (UX) experts ensured that products and their user interfaces (UIs) were usable. In many organizations, AI projects are rushed by people who aren’t accustomed to this level of rigor. AI agents may not even be aware of the brand. How do you enforce a uniform look and feel, familiar usability, and your brand promise?

How to Make it Work? UI Libraries. These include shared interface elements for dashboards, Teams apps, and web views. Having one central library keeps the user experience consistent and makes the apps easier to build and maintain.

Fix Ineffective Prompts with a Central Prompt Library

In the early days of this solution, prompting wasn’t an effective way to control the output, but that has changed. So how can users learn to write effective prompts? Better yet, how can we hide this complexity from end users as much as possible?

How to Make it Work? Prompt Library. The idea is to introduce system-level prompts that capture best practices, taking the burden of writing effective prompts away from individual users. It’s a centralized repository for reusable prompt templates, evaluation data, and tuning configuration.
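A prompt library can start small: named, versioned templates that apps render with their own data, so users fill in fields rather than write prompts. This Python sketch uses the standard library’s `string.Template`; the `PromptLibrary` class, its methods, and the template text are hypothetical, and evaluation data and tuning configuration are left out for brevity.

```python
from __future__ import annotations

from string import Template
from typing import Dict, Optional, Tuple


class PromptLibrary:
    """Hypothetical central store of versioned, reusable prompt templates."""

    def __init__(self) -> None:
        self._templates: Dict[Tuple[str, int], Template] = {}

    def publish(self, name: str, version: int, text: str) -> None:
        key = (name, version)
        if key in self._templates:
            raise ValueError(f"{name} v{version} exists; publish a new version instead")
        self._templates[key] = Template(text)

    def latest_version(self, name: str) -> int:
        versions = [v for (n, v) in self._templates if n == name]
        if not versions:
            raise KeyError(f"unknown prompt: {name}")
        return max(versions)

    def render(self, name: str, version: Optional[int] = None, **fields: str) -> str:
        """Fill a template; Template.substitute raises KeyError if a field is missing."""
        if version is None:
            version = self.latest_version(name)
        return self._templates[(name, version)].substitute(**fields)


library = PromptLibrary()
library.publish("screen-candidate", 1, "Compare this resume with the job: $resume / $job")
library.publish(
    "screen-candidate",
    2,
    "You are a recruiting assistant. Rate the resume against the job description "
    "on a 1-5 scale and list pros and cons.\nJob: $job\nResume: $resume",
)
print(library.render("screen-candidate", job="Data analyst", resume="5 years of SQL"))
```

Because published versions are immutable, an app can pin a known-good version while the platform team evaluates the next one.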

Fix Security & Confidentiality Risks with M365 & Entra Integration

Now this can be scary. What if you accidentally grant unauthorized individuals access to sensitive data? This could happen in many ways. Maybe in the rush to build an AI solution, your engineering team takes some shortcuts or skips some reviews. Or maybe the incorrectly configured access was always there, but nobody knew. Can you honestly say you’re 100% confident that the wrong people don’t have access to some SharePoint folders or files? Unlike us humans, AI is very good at finding information, and it will surface whatever secrets those misconfigured permissions leave exposed.

How to Make it Work? Microsoft 365 (M365) & Entra Integration. We suggest integrating with Entra for authentication and enabling secure access to company data through the Microsoft Graph APIs (Teams, SharePoint, Outlook) for analysis.
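The principle behind this integration is that the AI should never see more than the signed-in user could open themselves. With Microsoft Graph, delegated permissions limit an app to what the signed-in user can access; the Python sketch below only mimics that idea locally, with a hypothetical `Document` type and made-up group names. It does not repair permissions that were wrong to begin with; it only keeps the AI from widening access beyond them.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import FrozenSet, Iterable, List, Set


@dataclass(frozen=True)
class Document:
    doc_id: str
    text: str
    allowed_groups: FrozenSet[str]  # groups granted access in the source system


def retrieve_for_user(
    query: str, documents: Iterable[Document], user_groups: Set[str]
) -> List[Document]:
    """Return matching documents, trimmed to those the user's groups may open."""
    q = query.lower()
    return [
        doc
        for doc in documents
        if q in doc.text.lower() and doc.allowed_groups & user_groups
    ]


docs = [
    Document("d1", "Salary bands for 2026", frozenset({"hr-team"})),
    Document("d2", "Salary FAQ for all staff", frozenset({"all-staff"})),
]
print([d.doc_id for d in retrieve_for_user("salary", docs, {"all-staff"})])
# → ['d2']
```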

Ready to Build Your Winning AI Platform?

We’ve shared how high-performing organizations use AI platforms and agents to move beyond pilots.

Want us to help? Book a meeting with us via the contact form, and let’s explore what a winning AI platform could look like in your organization.