We built an MVP audio plugin that spatializes audio and records it in the Eclipsa Audio format. Our client could then use this MVP to expand the uptake of Eclipsa Audio’s IAMF file format.

Our client

The challenge

Our client wanted to add support for spatial audio playback to their existing software and services. To provide the best experience for their customers, the client planned to use the new Eclipsa Audio format, which is based on the Immersive Audio Model and Formats (IAMF) specification, a new file format for recording surround sound audio. However, because IAMF was so new, no tools yet existed for recording audio in this format.

To obtain IAMF audio, the client needed tools for recording surround sound, or spatial audio, in this new format. Rather than create a new recording tool, the client decided to augment existing tools with a plugin capable of writing IAMF files. Audio engineers could then record audio in the IAMF format using tools they already knew. The client planned to release the plugin first for the ProTools DAW (Digital Audio Workstation), which many senior audio engineers use in their daily work, and hoped to put an MVP in these engineers’ hands. The MVP could then be used both to record IAMF files and to assess how easy the IAMF file format would be to work with.

How we helped

The project had 3 main objectives:

- Create a plugin capable of both spatializing audio and recording this spatial audio information in Eclipsa Audio compatible IAMF files.

- Help design a user-friendly interface audio engineers could use to create and record spatial audio.

- Release a functioning plugin capable of being distributed to a wide audience of audio engineers.

We decided the best approach would be to first discuss the MVP with the professional audio engineers who would be using it, in order to understand what capabilities they would need to mix spatial audio. We also wanted to understand any pain points they had with the currently available spatial audio formats. Once we were confident we understood how the audio engineers wanted the tool to function, we planned to design our user interface and plugin architecture. Meanwhile, we would work on building a back-end recording engine capable of creating IAMF files. Finally, we would implement the UI and tie it to our recording engine using our plugin architecture. We would then build installers to distribute the plugin to the audio engineers.

Conducting Interviews

Our first step was to conduct interviews with the audio engineers who would be using the plugin. We set up a series of meetings to discuss these engineers’ current workflows and the tools they already used to map audio into 3D space. We learned that the audio engineers often struggled with small UI components and had difficulty monitoring audio when it was mapped to multiple speakers. We also learned that workflows varied substantially from engineer to engineer. Many audio engineers had highly customized setups for recording audio, so in order to slot our plugin into these setups we would need to provide a high degree of flexibility in how we created and recorded audio. Finally, engineers complained of performance issues with several of the existing tools. This suggested that whatever we built also needed to take performance and resource usage into consideration.

Understanding Eclipsa Audio and the IAMF File Format

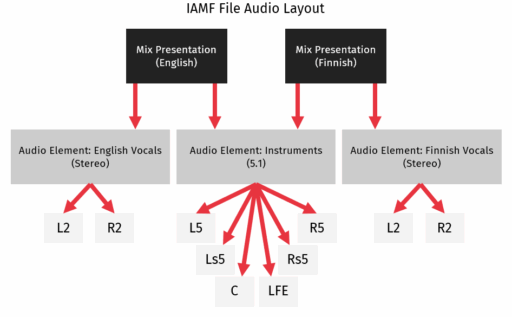

After conducting interviews, we set about understanding the IAMF file format. Generally, IAMF files are composed of 3 parts:

- Mix Presentations: Each mix presentation represents a method of playing back the audio in the IAMF file. A mix presentation is composed of one or more audio elements.

- Audio Elements: Each audio element is associated with a speaker layout or ambisonics audio sphere to be used during playback. These layouts, and the audio associated with them, are how 3D information is captured by the IAMF file format. Each speaker in an audio element has an associated audio stream. Each speaker layout or ambisonics audio sphere is converted to the layout of the playback hardware speakers during playback. By converting during playback, a wider variety of playback hardware setups can be supported.

- Audio Streams: An audio stream contains the actual audio to be played on a specific speaker for a specific audio element.

The diagram shows an example IAMF file layout containing two mix presentations, one for English and one for Finnish. When playing back the IAMF file, one of these two presentations is selected and then the audio associated with the audio elements making up the selected mix presentation is played back.

While the IAMF file format is much more complex than just these 3 pieces, by hiding much of the complexity from our users we aimed to simplify the file creation process to the creation of the above elements and the recording of the audio.
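The three-part structure described above can be sketched as plain C++ types. This is a simplified, hypothetical model for illustration only: the type names (`AudioStream`, `AudioElement`, `MixPresentation`, `IamfFile`) and the helper functions are assumptions, not the iamf-tools API, and the contents of the English/Finnish example (a shared music bed plus one dialogue element per language) are assumed rather than taken from the diagram.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical, simplified model of an IAMF file. Not the iamf-tools API.
struct AudioStream {
    std::string speakerLabel;    // e.g. "L", "R", "C"
    std::vector<float> samples;  // audio destined for that speaker
};

struct AudioElement {
    std::string name;                  // e.g. "Music bed"
    std::string layout;                // e.g. "Stereo", "5.1"
    std::vector<AudioStream> streams;  // one stream per speaker
};

struct MixPresentation {
    std::string label;                        // e.g. "English"
    std::vector<std::size_t> elementIndices;  // indices into IamfFile::elements
};

struct IamfFile {
    std::vector<AudioElement> elements;
    std::vector<MixPresentation> presentations;
};

// Collect the audio elements that would be played for one mix presentation.
std::vector<const AudioElement*> elementsFor(const IamfFile& file,
                                             std::size_t presentationIndex) {
    assert(presentationIndex < file.presentations.size());
    std::vector<const AudioElement*> selected;
    for (std::size_t idx : file.presentations[presentationIndex].elementIndices) {
        assert(idx < file.elements.size());
        selected.push_back(&file.elements[idx]);
    }
    return selected;
}

// Build a two-language example file (element contents are assumed).
IamfFile makeExampleFile() {
    IamfFile file;
    file.elements.push_back({"Music bed", "5.1", {}});
    file.elements.push_back({"English dialogue", "Stereo", {}});
    file.elements.push_back({"Finnish dialogue", "Stereo", {}});
    file.presentations.push_back({"English", {0, 1}});
    file.presentations.push_back({"Finnish", {0, 2}});
    return file;
}
```

Calling `elementsFor(makeExampleFile(), 1)` selects the Finnish presentation, which in this sketch shares the music bed with the English presentation and swaps only the dialogue element.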

Designing the audio plugins

Once we understood our users’ needs and how the IAMF file format captured spatialized audio, we started designing the plugin architecture and UI. For the user interface, we drew inspiration from existing tools in this space as well as the outcomes of our interviews with the audio engineers. We made sure to use larger UI elements and to build substantial monitoring capabilities into our UI in order to address some of the audio engineers’ biggest pain points.

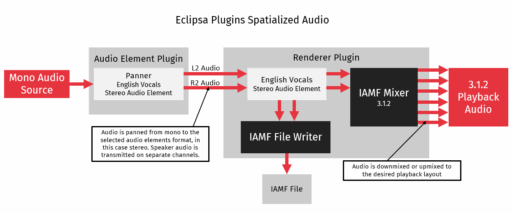

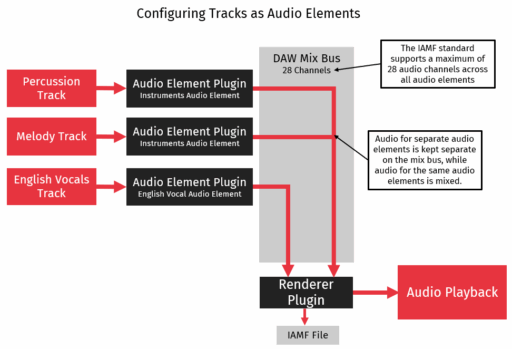

For the architecture we found that we would need to separate our plugin into two distinct plugins. One plugin, the audio element plugin, would be responsible for assigning track audio to an audio element and, optionally, spatializing the track’s audio. We could then use a second plugin, the renderer, to record the spatialized audio from all the tracks and write it to an IAMF file.

To implement this architecture, we needed to configure and record audio for each audio element. The problem was that while each track might be associated with a separate audio element, DAWs like ProTools automatically mix all audio for a specific speaker together. This meant that we needed to place each audio element’s audio on separate channels of the mix bus to prevent the DAW from performing unwanted mixing before we could record our audio.

To avoid this unwanted mixing, we used a 5th-order ambisonics mix bus, a much wider bus than our audio playback format. By using the DAW’s mix bus in this way, we were able to leverage the DAW’s internal mixing to mix audio between tracks using the same audio element while keeping audio associated with different audio elements separate.
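A 5th-order ambisonics bus carries (5 + 1)² = 36 channels, which leaves room to give each audio element its own block of channels. The sketch below shows one way such an assignment could work. The `BusAllocator` class and its simple contiguous, first-come allocation are assumptions for illustration; the source does not describe the actual assignment scheme.

```cpp
#include <cassert>

// Number of channels in a full-sphere ambisonics signal of the given order.
constexpr int ambisonicChannelCount(int order) {
    return (order + 1) * (order + 1);
}

// A contiguous block of channels on the mix bus.
struct ChannelRange {
    int first;  // index of the first bus channel in the block
    int count;  // number of channels in the block
};

// Hypothetical allocator: hands out non-overlapping channel blocks so that
// tracks sharing an audio element share channels (and are mixed by the DAW),
// while different audio elements never overlap.
class BusAllocator {
public:
    explicit BusAllocator(int busWidth) : busWidth_(busWidth), next_(0) {
        assert(busWidth > 0);
    }

    // Reserve `count` channels. Returns false, leaving `out` untouched,
    // when the request is invalid or the bus has too few channels left.
    bool allocate(int count, ChannelRange& out) {
        if (count <= 0 || next_ + count > busWidth_) {
            return false;
        }
        out.first = next_;
        out.count = count;
        next_ += count;
        return true;
    }

    int remaining() const { return busWidth_ - next_; }

private:
    int busWidth_;
    int next_;
};
```

For example, a 5.1 audio element (6 channels) followed by a stereo element (2 channels) would occupy bus channels 0 to 5 and 6 to 7 in this sketch, leaving 28 channels free.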

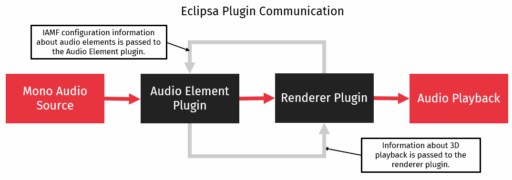

Handling audio plugin communication

After we determined that two separate plugins would be needed, we set about determining how they would communicate with each other. The Renderer plugin would need to tell the Audio Element plugins which audio elements were available and which channels those audio elements were using. Similarly, each Audio Element plugin would need to publish real-time information about where its audio was in 3D space for monitoring on the Renderer.

We ended up using a publish/subscribe model for communication, creating two pub/sub channels. On one channel, the Renderer plugin would publish information about audio elements to all the Audio Element plugins. On the other channel, the Audio Element plugins would publish information about how they were panning the audio, and the Renderer plugin would subscribe to it. We used a combination of the JUCE and ZeroMQ libraries to achieve this communication.
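The two-channel arrangement can be illustrated with a small in-process publish/subscribe bus. This is a stand-in for illustration only: the project used JUCE and ZeroMQ, whereas the `MessageBus` class, the topic names, and the string payloads below are all hypothetical and use neither library's API.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <map>
#include <string>
#include <utility>
#include <vector>

// Hypothetical topic names for the two channels described above.
const char* const kAudioElementsTopic = "audio-elements";  // Renderer -> Audio Element plugins
const char* const kPanningTopic = "panning";               // Audio Element plugins -> Renderer

// Minimal in-process publish/subscribe bus (not ZeroMQ or JUCE).
class MessageBus {
public:
    using Handler = std::function<void(const std::string& payload)>;

    // Register a callback to run whenever `topic` receives a message.
    void subscribe(const std::string& topic, Handler handler) {
        assert(static_cast<bool>(handler));
        subscribers_[topic].push_back(std::move(handler));
    }

    // Deliver `payload` to every subscriber of `topic`.
    // Returns the number of subscribers that received it.
    std::size_t publish(const std::string& topic, const std::string& payload) const {
        auto it = subscribers_.find(topic);
        if (it == subscribers_.end()) {
            return 0;
        }
        for (const auto& handler : it->second) {
            handler(payload);
        }
        return it->second.size();
    }

private:
    std::map<std::string, std::vector<Handler>> subscribers_;
};
```

In this sketch, each Audio Element plugin instance would subscribe to `kAudioElementsTopic` and the Renderer would subscribe to `kPanningTopic`, so neither side needs a direct reference to the other. A real implementation would also have to cross plugin instance boundaries inside the DAW, which this in-process version does not attempt.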

Monitoring, recording and writing

Once we had the architecture in place and the UI designed, we began building the different components for each of the plugins. To write the IAMF files themselves, we used a combination of IAMF libraries and JUCE libraries to write each audio element’s audio to a separate file and then combine those files into a single IAMF file. For monitoring playback, we used a combination of tools JUCE provides and some custom UI code to create a set of 3D perspectives for monitoring both individual speaker audio levels and the overall layout of the audio sources in 3D space. Finally, we added automation capabilities to allow audio to be moved in 3D space during playback.

One of the major challenges was downmixing and upmixing audio between the various formats. Because many audio elements make up an IAMF file, and because these audio elements may have different speaker layouts, we needed an easy way to convert all this audio to the desired playback format and then mix it together. We set up a system of matrix multiplications that could be used to convert between different audio channel layouts. With this set of matrices in place, we were able to mix the audio elements’ audio together and upmix or downmix to the desired playback speaker layout with very low latency, addressing our audio engineers’ concerns about high performance overhead.
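The matrix approach can be sketched as follows: each conversion between layouts is a matrix with one row per output channel and one column per input channel, and converting a frame of audio is a matrix-vector product. The function names and the coefficients here are assumptions for illustration (the 0.7071 gain, roughly -3 dB, is a common textbook choice); they are not the values used in the project or mandated by the IAMF specification.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

using Frame = std::vector<float>;                // one sample per channel
using Matrix = std::vector<std::vector<float>>;  // rows = outputs, cols = inputs

// Convert one frame from the input layout to the output layout.
Frame applyMix(const Matrix& mix, const Frame& in) {
    Frame out(mix.size(), 0.0f);
    for (std::size_t row = 0; row < mix.size(); ++row) {
        assert(mix[row].size() == in.size());
        for (std::size_t col = 0; col < in.size(); ++col) {
            out[row] += mix[row][col] * in[col];
        }
    }
    return out;
}

// Mix two frames that already share the same layout.
Frame sum(const Frame& a, const Frame& b) {
    assert(a.size() == b.size());
    Frame out(a.size());
    for (std::size_t i = 0; i < a.size(); ++i) {
        out[i] = a[i] + b[i];
    }
    return out;
}

// Illustrative downmix from 5.1 (L, R, C, LFE, Ls, Rs) to stereo (L, R).
// The LFE channel is dropped in this sketch.
Matrix fiveOneToStereo() {
    const float g = 0.7071f;
    return {
        {1.0f, 0.0f, g, 0.0f, g, 0.0f},
        {0.0f, 1.0f, g, 0.0f, 0.0f, g},
    };
}

// Illustrative upmix from mono to stereo: equal gain to both speakers.
Matrix monoToStereo() {
    const float g = 0.7071f;
    return Matrix(2, std::vector<float>(1, g));
}
```

With matrices like these, a 5.1 element and a mono element can each be converted to the playback layout with `applyMix` and then combined with `sum`. Because the matrices are small and fixed for a given pair of layouts, the per-frame cost is a handful of multiply-adds.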

Integrating the audio plugins with ProTools

After developing the plugins, the last step was to integrate them with the targeted DAW, ProTools. ProTools required that we go through an extensive signing process, including using a hardware signing tool, in order to allow the plugins to run. We created installers and then went through the signing process. We acquired development certificates from both ProTools and Apple for signing our plugins and their installers so that no security issues would be raised during the installation process.

Finally, once the installers were signed, we went through a thorough process of integration testing to ensure that there were no issues with the MVP. While we had been testing throughout the development process, once we had the installers we made sure to perform full end-to-end tests to make sure that no issues had been introduced or were still unfixed.

Deliverables

The deliverable of this project was a minimum viable product that could spatialize audio, write IAMF files, and be installed in the ProTools DAW. The final solution consisted of the following components:

- A Renderer plugin capable of configuring Audio Elements and Mix Presentations for an IAMF file and then recording audio to an IAMF file while also providing monitoring during the recording process.

- An Audio Element plugin capable of assigning an Audio Element to a track of audio as well as optionally allowing the user to place the audio source in 3D space.

- A single signed installer which installed both plugins in the ProTools DAW.

The solution was successfully tested and validated by the client’s team.

Future Work

The client was happy with the resulting MVP and was eager to start the distribution process once development work had been completed. We are now helping the client with the distribution process, including setting up a distribution website and creating user-facing documentation. We are also starting to gather feedback from audio engineers to further improve the initial product, and we are upgrading the MVP to support the newest changes to the IAMF standard.

Please contact us for more information about this case study or how we can implement Eclipsa Audio technologies in your business.

Date

11/2024

Languages

C++

Frameworks

JUCE

Protobuf

ZeroMQ

iamf-tools

OBR

Tools

Visual Studio Code

Avid ProTools

Environment

macOS
